For this project, we were shown how the Kinect camera (an add-on device for the Xbox 360 console) can be used to capture 3D space and for motion capture (aka ‘mo-cap’). The device costs about £120 and is used with Microsoft’s Xbox 360 console for playing interactive games where your body is the controller. Our lecturer stated that, before the Kinect came out, technology like this would have cost about £18,000! So there have been massive leaps in motion capture technology. The Kinect camera was not built to be used on computers; it is only thanks to open source software and plugins that we are able to use the camera on devices other than the Xbox 360. (Update) Microsoft has recently brought out a version of the Kinect camera built to plug into Windows PCs; it is nearly double the price, but it has better motion capture accuracy [1].

The Kinect camera works by shooting thousands of infra-red points across its field of view and then recording the depth from the reflection. For colour, it works like any other camera. So you end up with a 3D capture of the room as seen from one side only. If you have two cameras pointing at one central point from different positions, then you can start to build a bigger picture. The motion capture is down to the software used. The software scans for an identifiable figure shape (standing up straight with arms stretched out to the sides), and from there records the progression of movement. On screen, the body movement is represented as a skeleton wireframe. The captured motion can be imported into 3DS Max with a dummy figure attached to the skeleton, which can then be placed in a virtual 3D environment.
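To make the depth idea concrete, here is a minimal sketch of how a grid of depth readings could be turned into 3D dots. It assumes a simple pinhole camera model; the function name `depth_to_points` and the values of `fx`, `fy`, `cx` and `cy` are made up for illustration and are not real Kinect calibration figures or part of the software we used.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image (rows of depths in metres) into 3D points.

    Assumes a pinhole camera: fx, fy are focal lengths in pixels and
    (cx, cy) is the image centre. A depth of 0 means 'no reading'.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no infra-red reflection came back for this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny made-up 2x3 depth image (metres); 0 marks a missing reading.
depth = [
    [2.0, 2.0, 0.0],
    [1.0, 1.0, 1.0],
]
# Hypothetical camera values chosen only to keep the arithmetic simple.
points = depth_to_points(depth, fx=2.0, fy=2.0, cx=1.0, cy=0.0)
print(len(points), "points, first:", points[0])
```

Every point comes from a pixel the camera could actually see, which is why a single Kinect only gives you the side of the room facing it.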

Our brief for the project was to create any form of media using the Kinect camera. For example, an amalgamation of 3D heads like a Mount Rushmore, or a motion capture of someone dancing.

A classmate (Stephen Dunn) and I chose to take a series of shots of me walking up towards the Kinect camera. With those we then created an animatic to represent some sort of walking mud man. The Kinect camera is not that accurate at scanning, especially at greater depths, so people look like big blob figures.

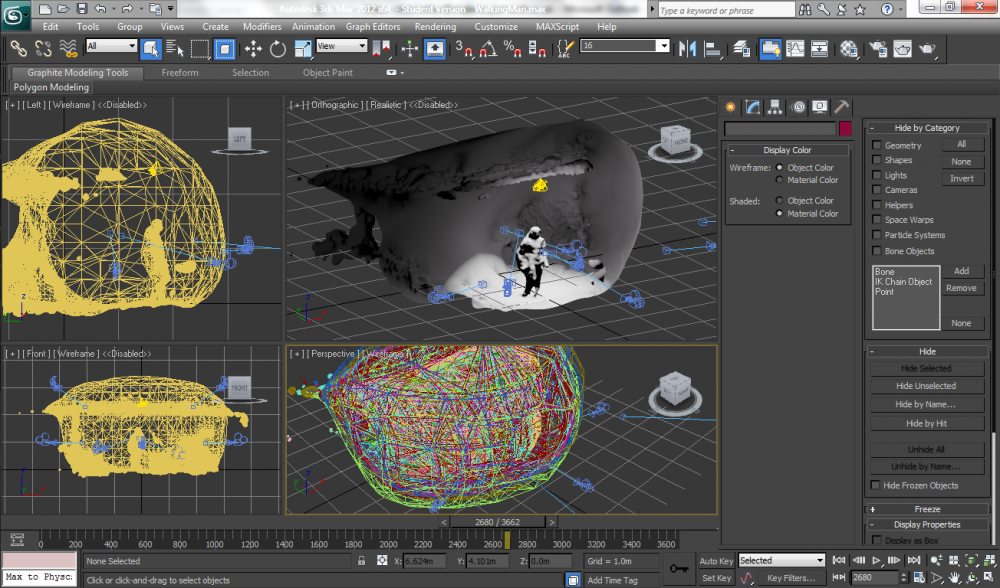

So we started the shots of me walking. The Kinect takes a few seconds to process each shot, which meant I had to pause walking every part-step. We took a total of 16 shots and imported them into 3DS Max. During the transfer we had to run a couple of processes to convert each captured 3D shot. The Kinect captures a scene as dots, so each dot in 3D space has to be joined up into a wireframe.

Image (of the 4 quarters…): top-right shows one wireframe shaded; bottom-right shows all wireframes centred.
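As a rough illustration of joining dots into a wireframe, the sketch below connects neighbouring dots on the capture grid into triangles. This is one simple approach and not necessarily what our conversion software did; `grid_to_triangles` and the `valid` grid are hypothetical names for this example only.

```python
def grid_to_triangles(valid):
    """Join neighbouring dots into triangles (as triples of dot indices).

    `valid` is a grid of booleans saying whether each dot was captured.
    Dot (row, col) has index row * width + col. Every 2x2 cell whose four
    dots are all present becomes two triangles; other cells are skipped.
    """
    height = len(valid)
    width = len(valid[0])
    triangles = []
    for r in range(height - 1):
        for c in range(width - 1):
            corners = [(r, c), (r, c + 1), (r + 1, c), (r + 1, c + 1)]
            if not all(valid[y][x] for y, x in corners):
                continue  # a missing dot leaves a hole in the mesh
            tl, tr, bl, br = (y * width + x for y, x in corners)
            triangles.append((tl, bl, tr))
            triangles.append((tr, bl, br))
    return triangles


# Made-up 2x3 grid with one missing dot in the bottom-right corner.
valid = [
    [True, True, True],
    [True, True, False],
]
triangles = grid_to_triangles(valid)
print(triangles)
```

Cells with a missing dot are simply left out, so a mesh built this way can end up with gaps rather than forming one closed shape.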

Once in 3DS Max, each of the 16 wireframes needed centring, scaling up in the 3D scene, and animating. The animation involved changing the opacity of each 3D shot so that they appeared sequentially, merging into one another. We realised later on that a texture had to be applied before changing the opacity of each shot, which meant we had to start the process again. We chose a lava texture with a bump map applied to it. The bump map made the texture seem bumpy, to represent real lava [2]. Ideally we wanted an animated lava texture [3], but because the wireframe was not one complete shape, 3DS Max would not show the animated texture. Once satisfied, we added spot lights and virtual viewpoints to the scene to capture/render it.
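The opacity animation can be thought of as a cross-fade, where each shot fades in while the previous one fades out. Here is a small sketch of that idea. The 16 shots come from our project, but the linear fade, the `shot_opacity` function and the 10 frames per shot are assumptions for illustration; we set the real keyframes by hand in 3DS Max.

```python
def shot_opacity(frame, shot_index, frames_per_shot):
    """Opacity (0.0 to 1.0) of one shot at a given animation frame.

    Shot i is fully visible at frame i * frames_per_shot and fades
    linearly to nothing one shot-length either side, so that
    neighbouring shots overlap and merge into one another.
    """
    peak = shot_index * frames_per_shot
    distance = abs(frame - peak)
    return max(0.0, 1.0 - distance / frames_per_shot)


SHOT_COUNT = 16        # number of Kinect shots we took
FRAMES_PER_SHOT = 10   # assumed spacing, for illustration only

# Halfway between shot 0 and shot 1, both are half visible.
halfway = [shot_opacity(5, i, FRAMES_PER_SHOT) for i in range(SHOT_COUNT)]
print(halfway[:3])
```

With this kind of fade the opacities of the two overlapping shots always add up to 1, so the figure never disappears or doubles in brightness between steps.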

After 9 hours of rendering 3 different viewpoints at 213x120 pixels (my laptop fan was running so loud!), we had the footage to piece together in Adobe After Effects and Adobe Premiere. Because the film was silent, we searched the Internet for free sound effects to use. We found sound effects for howling wind and bubbles blown in a glass of water. The sounds were stretched out to make them lower-pitched and more prolonged. [4][5]
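Stretching a sound makes it both longer and lower because the same waveform is spread over more samples. The sketch below shows the idea with simple linear interpolation; it is not how Premiere or After Effects necessarily does it, and `stretch` is a made-up helper for this example.

```python
def stretch(samples, factor):
    """Make a sound last about `factor` times longer by resampling.

    New samples are filled in by linear interpolation. Played back at the
    original sample rate, the result is slower and lower in pitch.
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    if len(samples) < 2:
        return list(samples)
    out_len = int((len(samples) - 1) * factor) + 1
    out = []
    for n in range(out_len):
        pos = n / factor          # position in the original sound
        i = int(pos)
        frac = pos - i
        if i >= len(samples) - 1:
            out.append(samples[-1])
        else:
            out.append(samples[i] * (1 - frac) + samples[i + 1] * frac)
    return out


# A made-up three-sample 'blip' stretched to twice its length.
stretched = stretch([0.0, 1.0, 0.0], 2)
print(stretched)
```

Stretching by a factor of 2 halves every frequency in the sound, which is a drop of one octave.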

To improve upon it in the future, we would probably render it at a higher resolution, given more time. One definite addition would be figuring out how to apply a moving lava texture. We could also look at the motion capture route, though it would mean losing the shapes (blobs) that the Kinect creates from still shots.

Since starting the project I have learnt how to use the Kinect camera, its linking software, 3DS Max, Adobe After Effects, and Adobe Premiere, all of which I had never used before.

[1] http://uk.pc.gamespy.com/articles/121/1217718p1.html

[2] Lava bitmap: http://ayelie-stock.deviantart.com/art/Lava-texture-2-67168268

[3] http://www.3dm3.com/tutorials/ps/

[4] Drone Airy Hollow Swells Distant Steam Releases Alien Planet Lava Bed: http://www.soundsnap.com/node/55470

[5] Bubbles: http://www.soundsnap.com/node/35302